Have You Seen This AI Avocado Chair?

DALL-E Generates Images of Almost Anything from Text

What is DALL-E?

DALL-E creates images from text. It is a transformer language model developed by OpenAI and announced on January 5, 2021.

DALL-E combines the text generation capabilities of GPT-3 with the image generation capabilities of Image GPT, bringing together the progress of these two highly successful neural networks.

What can DALL-E do?

Here comes the fun part.

DALL-E’s ability to create realistic, plausible images is surreal. It understands language and uses the prompt as instructions for generating images.

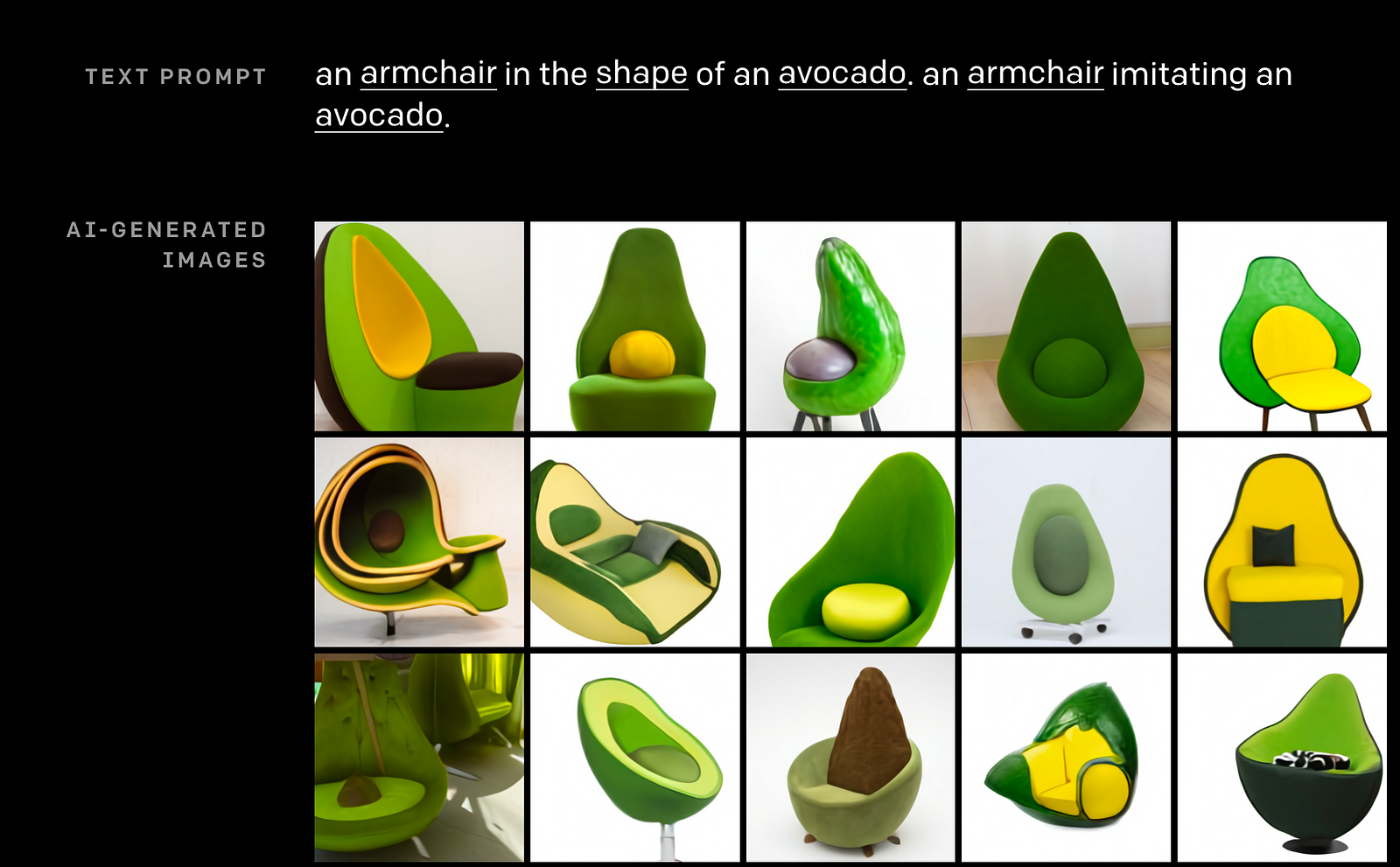

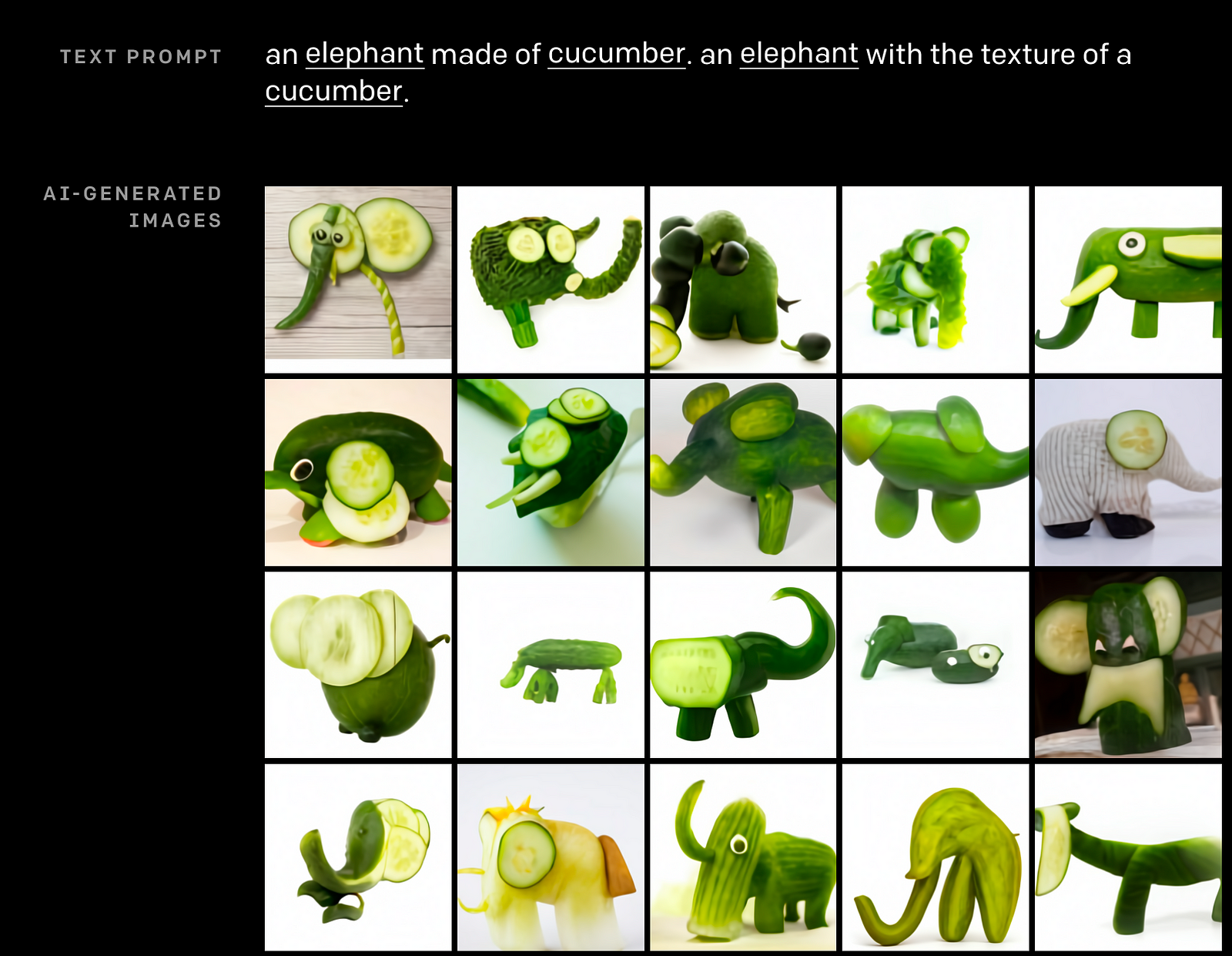

DALL-E can combine concepts and generate novel images

Astoundingly, it is able to combine multiple concepts and modify attributes of an object. Now, picture an armchair in the shape of an avocado.

Just as humans can combine the concepts of an armchair and an avocado into a single image, so can DALL-E, even though it might never have seen an avocado-shaped armchair before.

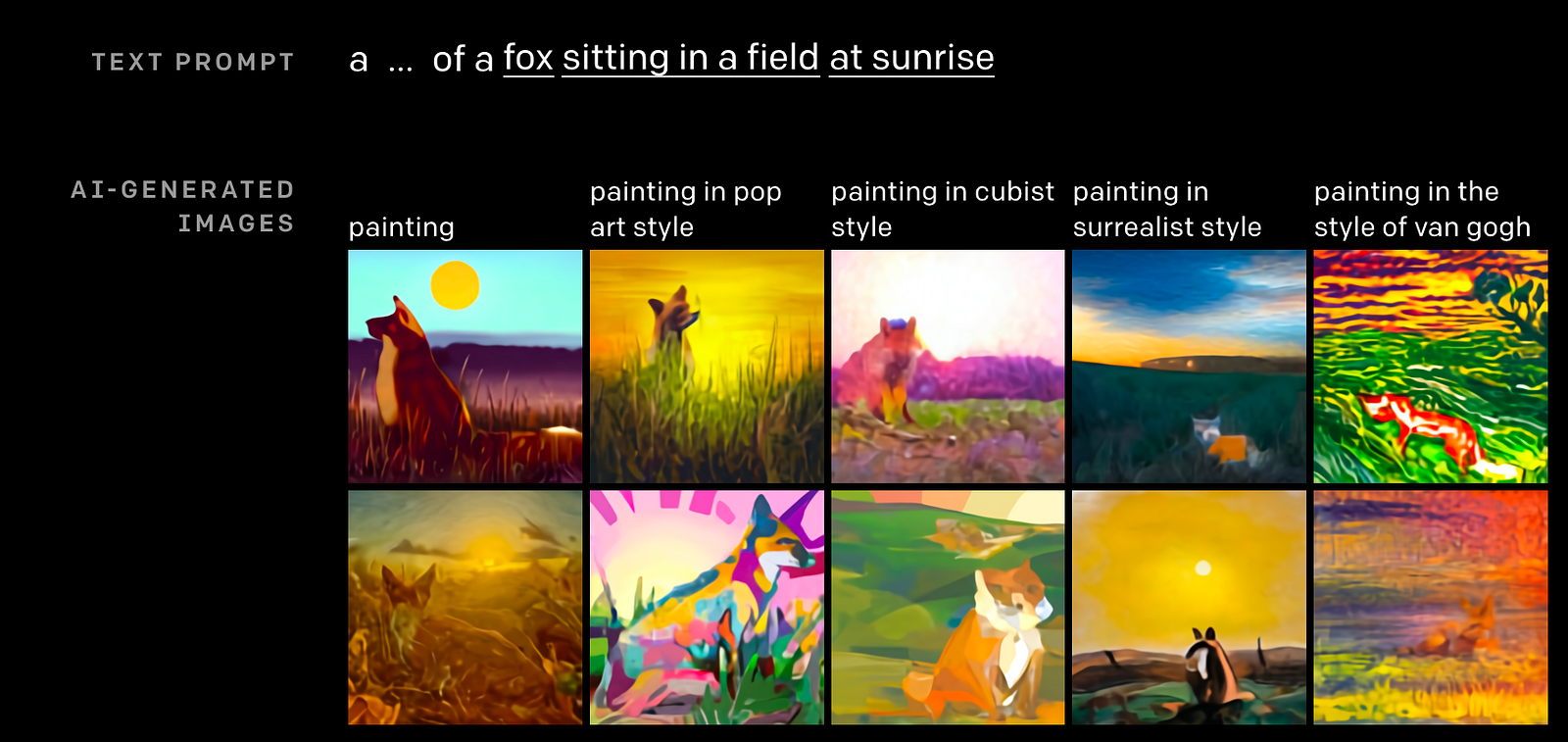

DALL-E can paint

We have seen deep neural networks such as generative adversarial networks (GANs) imitate the style of certain artists. DALL-E takes this further by translating text commands into images.

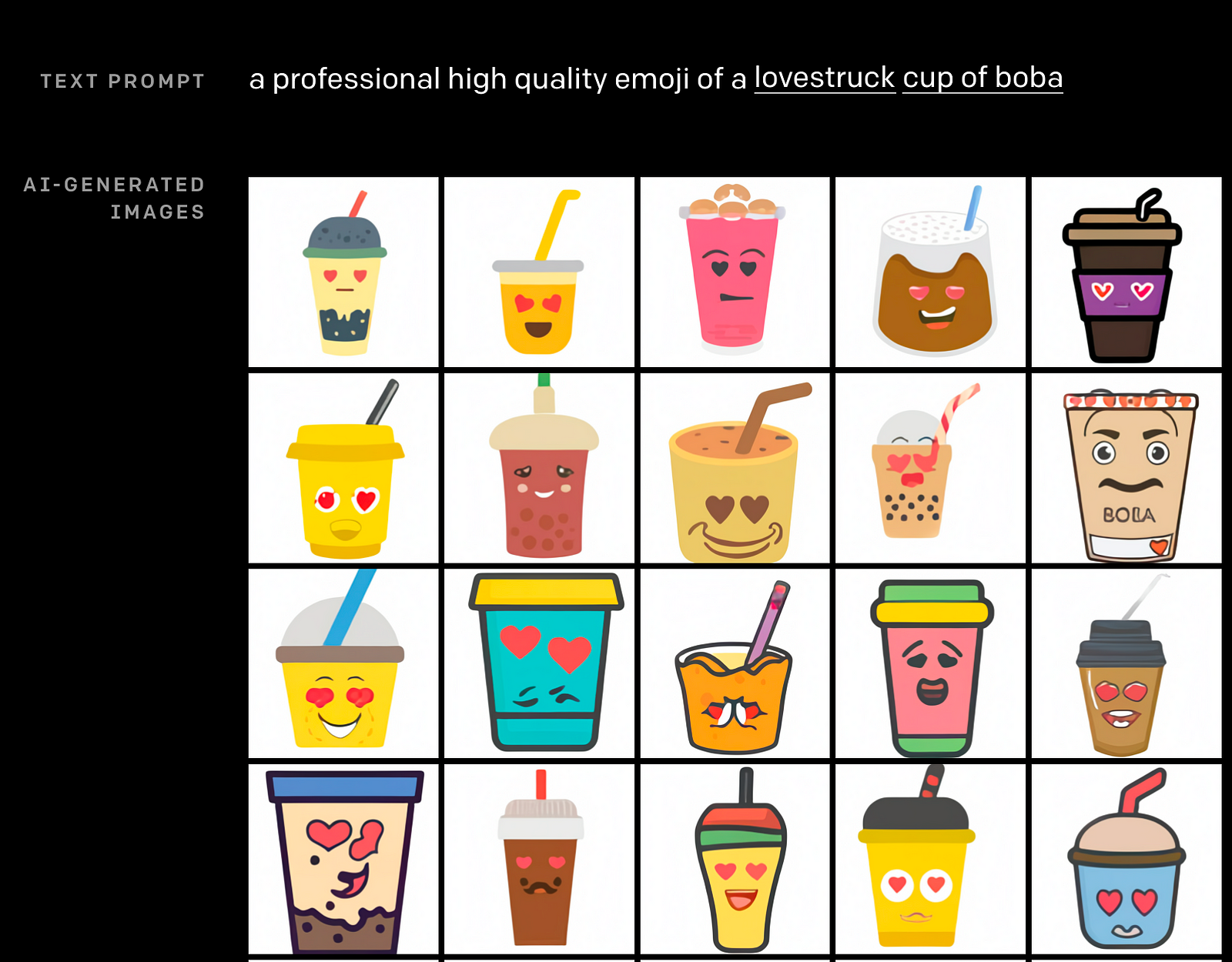

DALL-E can illustrate

DALL-E is also able to generate anthropomorphized illustrations and even emojis.

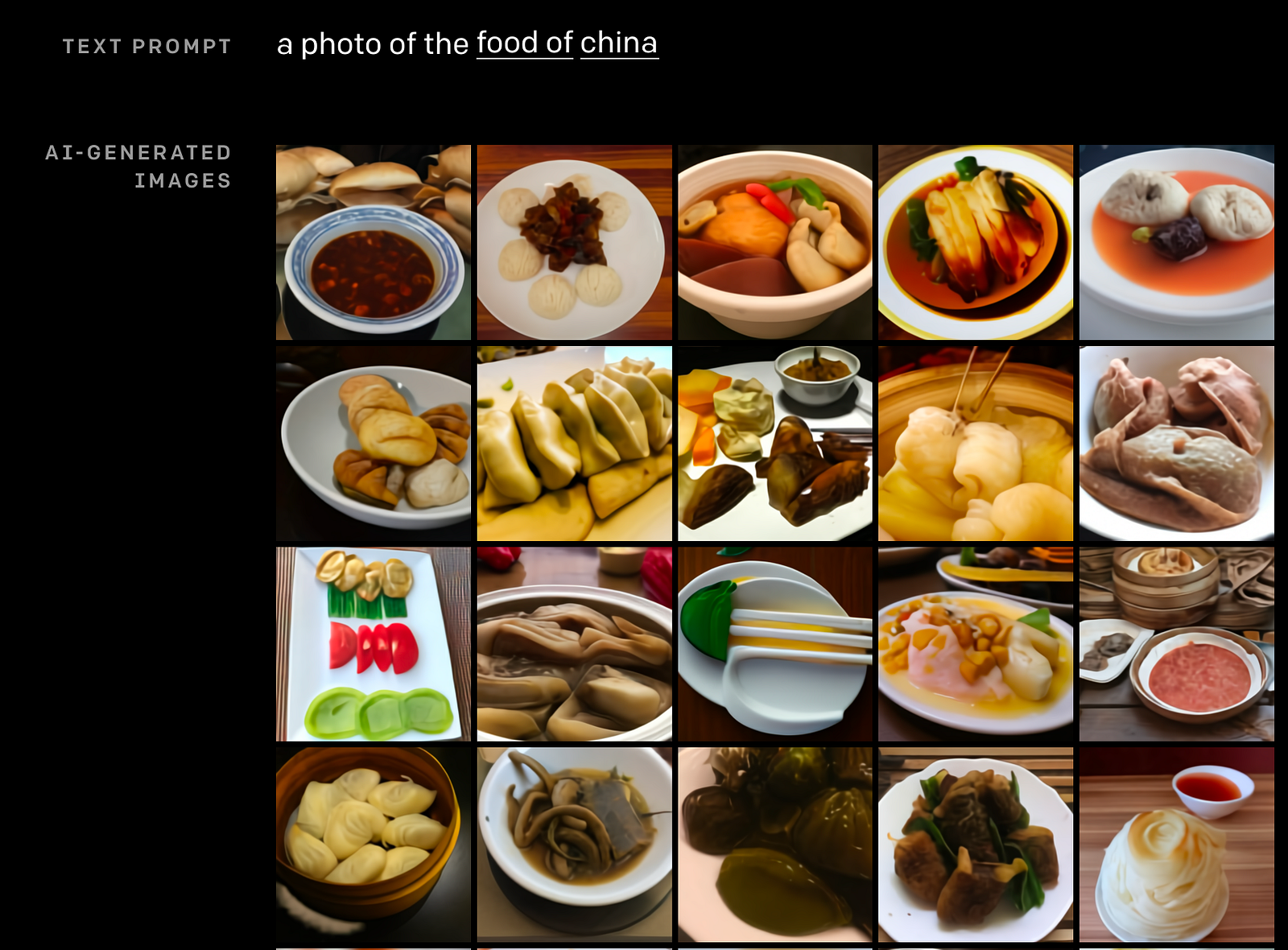

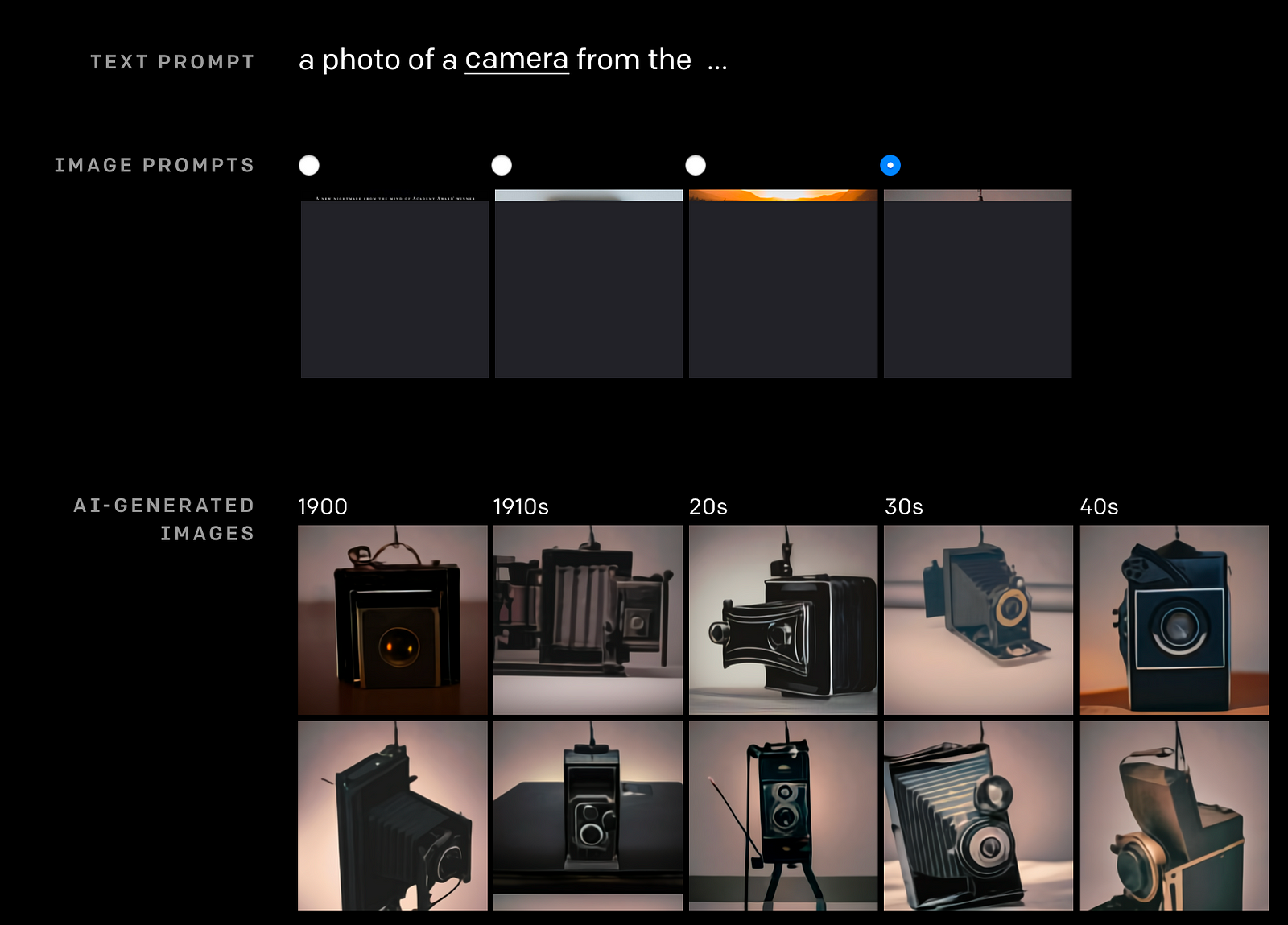

DALL-E knows geography and history

Most interestingly, DALL-E seems to have traveled through space and time and learnt geographic and historical facts about the world.

What can DALL-E NOT do?

Now, DALL-E might seem fascinating, but it is by no means perfect. Here are three specific instances where DALL-E fails.

DALL-E might fail on some texts

DALL-E might work very well on certain phrases but fail on semantically equivalent ones. That is why the authors of DALL-E sometimes need to repeat themselves in their prompts to increase the success rate of the image generation task.
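To make the "repeat yourself" trick concrete, here is a minimal sketch of how such a prompt could be put together. The helper name `build_prompt` and the example phrases are hypothetical, chosen only for illustration; the code does not call DALL-E or any real API, it just builds the text you would type in.

```python
from typing import Sequence


def build_prompt(description: str, paraphrases: Sequence[str]) -> str:
    """Join a description and reworded versions of it into one prompt.

    Hypothetical helper for illustration only; it builds a string and
    does not talk to any image generation service.
    """
    parts = [description, *paraphrases]
    # Drop empty entries and trailing periods so the pieces join cleanly.
    cleaned = [p.strip().rstrip(".") for p in parts if p.strip()]
    return ". ".join(cleaned) + "."


prompt = build_prompt(
    "an armchair in the shape of an avocado",
    ["an armchair imitating an avocado"],
)
print(prompt)
```

The idea is simply that saying the same thing twice in slightly different words gives the model two chances to latch onto the intended meaning.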

DALL-E may fail on unfamiliar objects

DALL-E is sometimes unable to render objects that are unlikely to occur in real life. For instance, it is unable to render a photo of a pentagonal stop sign, instead showing a conventional octagonal stop sign.

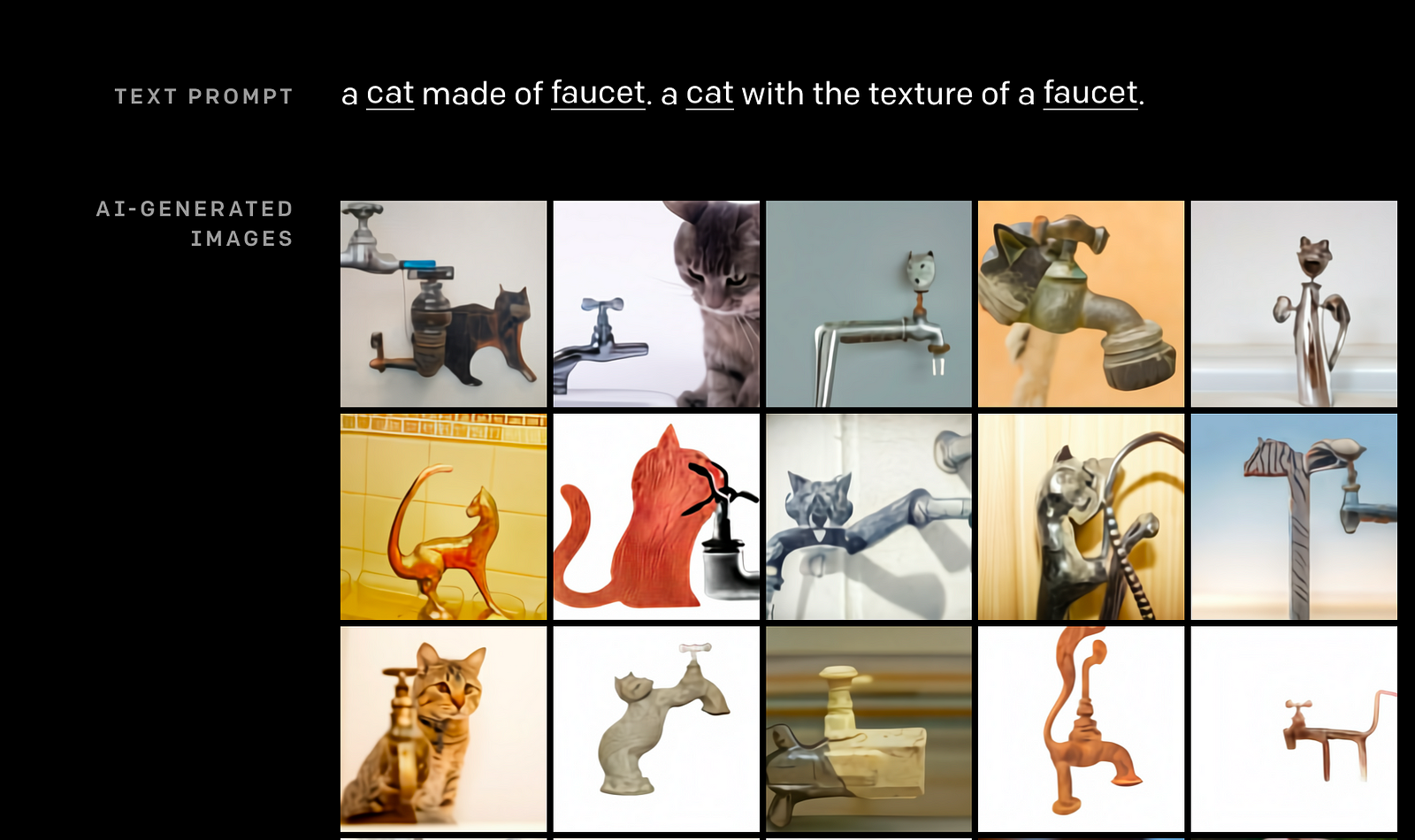

DALL-E may take shortcuts

When prompted to combine objects, DALL-E might instead opt to draw them separately. For instance, when prompted to draw a cat made of a faucet, it instead draws a faucet and a cat side by side.

What’s next?

OpenAI previously licensed GPT-3 technology to Microsoft. Similarly, OpenAI might license DALL-E to tech giants who will have the resources to deploy and govern DALL-E effectively.

DALL-E is extremely powerful and conceivably has monumental societal implications. If generative adversarial networks (GANs) can be used to generate deep fakes, imagine the possible negative repercussions of DALL-E in generating fake images and proliferating fake news.

Some of the ethical issues that DALL-E raises are hairy and ambiguous. They need to be addressed before we can expect widespread adoption of this artificial intelligence technology in our everyday lives. These complex issues include:

- Potential bias in model outputs (what if DALL-E under-represents minority groups?)

- Model safety and accidents (i.e. how do we prevent the AI from accidentally doing something harmful?)

- AI Alignment (i.e. getting the model to do what you want it to do)

- The economic impact on certain professions (think about the illustrators whose jobs might be displaced by such technology)

That aside, the deep learning and machine learning space is an extremely exciting place to be in right now. You can read more about OpenAI on their website.

If you like this post, you can check out my other posts or connect with me on LinkedIn.