Learn Data Science Now: Conditional Probability

Chihuahua, Muffins and Confusion Matrix in Machine Learning

Conditional probability is one of the fundamental concepts in probability and statistics, and by extension, data science and machine learning.

In fact, we can evaluate the performance of a machine learning model using a confusion matrix, which can be interpreted from a conditional probability perspective.

In this blog post, we will cover all that and more:

- Introduction

- A formal definition of conditional probability

- Intuition to conditional probability: Moving to a new universe

- Conditional Probability in Machine Learning and Confusion Matrix

- How to learn more probability

Let’s get started.

Conditional Probability Introduction

Conditional probability is a method to reason about the outcome of an experiment, based on partial information.

Without using any formulas, let’s try to understand conditional probability intuitively using an example.

Question: You throw a six-sided die. Your friend looks at the roll and tells you it is an even number. How likely is it to be a 6?

Before your friend looks at the roll, there are 6 possible outcomes (1, 2, 3, 4, 5, 6).

After your friend tells you that the roll is even, we are left with 3 possible outcomes: 2, 4 and 6.

Thus, out of three equally likely scenarios, exactly one is a 6. The probability of rolling a 6 given that the roll is an even number is thus 1/3.
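The same counting argument can be checked with a few lines of Python. This is only a minimal sketch that assumes a fair die, so every face is equally likely; the variable names are my own.

```python
from fractions import Fraction

# All outcomes of one roll of a fair six-sided die.
outcomes = [1, 2, 3, 4, 5, 6]

# Partial information from your friend: the roll is even.
even_rolls = [roll for roll in outcomes if roll % 2 == 0]

# Within that reduced set of outcomes, keep the rolls that are a 6.
sixes = [roll for roll in even_rolls if roll == 6]

p_six_given_even = Fraction(len(sixes), len(even_rolls))

print(even_rolls)        # [2, 4, 6]
print(p_six_given_even)  # 1/3
```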

Conditional Probability Formal Definition

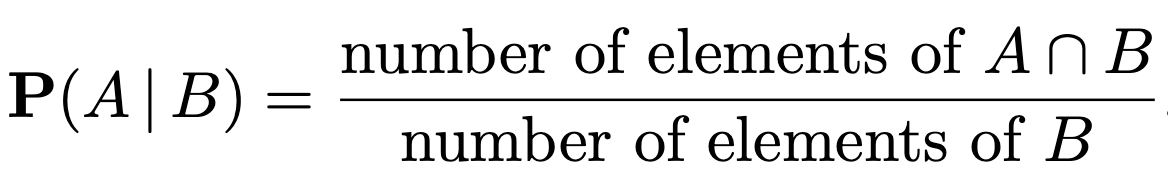

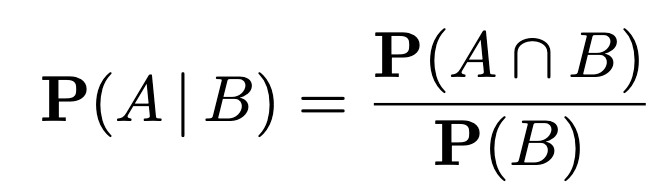

We can now formalize that intuition.

If all outcomes are equally likely, as in the dice roll above, then

P(A|B) = (number of outcomes in both A and B) / (number of outcomes in B)

Generalizing further, for any event B with P(B) > 0,

P(A|B) = P(A ∩ B) / P(B)
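The general definition, P(A|B) = P(A ∩ B) / P(B), can be sketched as a small Python function over a finite set of outcomes. The function name `conditional_probability` and the loaded die are hypothetical, introduced only for illustration. The fair die reproduces the 1/3 from the introduction, while the loaded die shows that the definition still works when outcomes are not equally likely.

```python
from fractions import Fraction


def conditional_probability(pmf, event_a, event_b):
    """Return P(A|B) = P(A and B) / P(B) for a finite sample space.

    pmf maps each outcome to its probability.
    event_a and event_b are sets of outcomes.
    """
    p_b = sum(p for outcome, p in pmf.items() if outcome in event_b)
    if p_b == 0:
        raise ValueError("P(B) must be positive")
    p_a_and_b = sum(
        p for outcome, p in pmf.items()
        if outcome in event_a and outcome in event_b
    )
    return p_a_and_b / p_b


# Fair die: every face has probability 1/6.
fair_die = {face: Fraction(1, 6) for face in range(1, 7)}
p_fair = conditional_probability(fair_die, {6}, {2, 4, 6})
print(p_fair)  # 1/3

# Hypothetical loaded die: 6 has probability 1/2, every other face 1/10.
loaded_die = {face: Fraction(1, 10) for face in range(1, 6)}
loaded_die[6] = Fraction(1, 2)
p_loaded = conditional_probability(loaded_die, {6}, {2, 4, 6})
print(p_loaded)  # 5/7
```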

With this concept, we can also seek to answer the following questions:

(b) You are a password hacker. Your advanced hacking skills tell you that Michael’s email password consists of 8 lower-case letters. If every combination is equally likely, what is the chance that the password is ‘password’?

(c) How likely is it that a person is HIV-positive given that the person tested negative for HIV?

(d) You built a machine learning model to classify pictures of chihuahuas and muffins. Your classification model tells you that picture X is a photo of a chihuahua. How likely is it that the model is wrong?

Notice that the questions conditional probability seeks to answer all take the form ‘given X, find the probability of Y’.
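Question (b) can be worked out by counting. The sketch below assumes that every string of 8 lower-case English letters is equally likely, which is my reading of the question; under that assumption the given information leaves 26^8 possible passwords.

```python
from fractions import Fraction
import string

alphabet_size = len(string.ascii_lowercase)  # 26 lower-case letters
password_length = 8

# Number of passwords in the 'universe' of 8 lower-case letters.
total_passwords = alphabet_size ** password_length

# Exactly one of them is 'password'.
p_password = Fraction(1, total_passwords)

print(total_passwords)    # 208827064576
print(float(p_password))  # roughly 4.8e-12
```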

Conditional Probability Intuition: Moving to a New Universe

One of the intuitions of conditional probability is that we can view conditional probability as a probability law on a new universe B, because all of the conditional probability is concentrated on B.

In solving conditional probability problems P(A|B), we can imagine transporting ourselves to a world where the given event B must have happened, and then simply look for the ordinary probability of event A in that universe.

Let’s compare two scenarios. In both scenarios, you want to hack into Michael’s Dunder Mifflin mail. By default, there is no limit on the length of the password.

Scenario A) Without any additional information, what is the chance that Michael’s password is ‘1234’?

In this scenario, the probability that Michael’s password is 1234 is close to zero. With no limit on length, there are infinitely many possible passwords, and 1234 is just one of them.

Scenario B) Given that Michael’s email password is made up of the digits 1, 2, 3 and 4, each used exactly once, what is the chance that the password is ‘1234’?

In scenario B, we can imagine transporting ourselves into a universe where every email password has exactly 4 characters and contains each of 1, 2, 3 and 4. In this world, we ask ourselves: what is the probability that these 4 digits are arranged in the sequence 1234?

In this world, the password can only be one of 24 arrangements: 1234, 1243, 1324, 1342, 1423, 1432, 2134, …, 4321. We assume each arrangement is equally likely.

Thus, the conditional probability is 1/24.
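To double-check the count of 24, we can list every arrangement of the four digits with `itertools.permutations` from the Python standard library. This sketch assumes, as in scenario B, that each digit is used exactly once and that every arrangement is equally likely.

```python
from fractions import Fraction
from itertools import permutations

# Every ordering of the digits 1, 2, 3 and 4, each used exactly once.
arrangements = ["".join(digits) for digits in permutations("1234")]

# Only one arrangement matches the password we are guessing.
matches = [candidate for candidate in arrangements if candidate == "1234"]

p_1234 = Fraction(len(matches), len(arrangements))

print(len(arrangements))  # 24
print(arrangements[:3])   # ['1234', '1243', '1324']
print(p_1234)             # 1/24
```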

With such intuition, we can solve simple problems with more ease and without needing to resort to mindless math.

Conditional Probability in Machine Learning and Confusion Matrix

Conditional probability is useful in the context of evaluating machine learning models. I’ll illustrate with an example.

You built a machine learning model to classify pictures of chihuahuas and muffins.

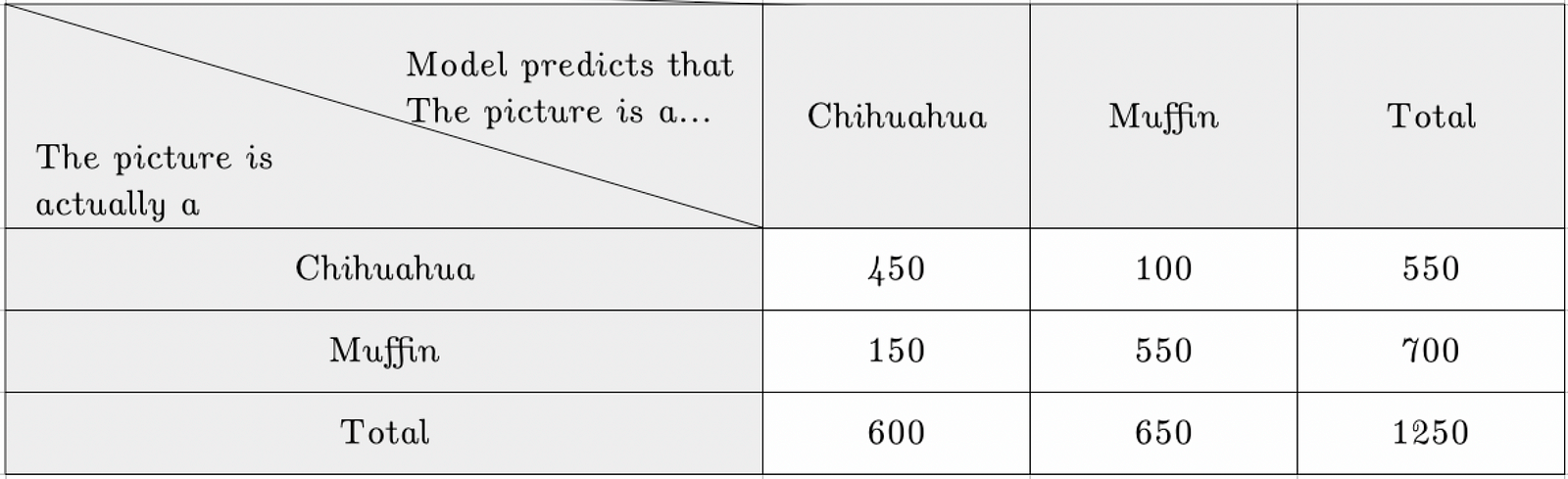

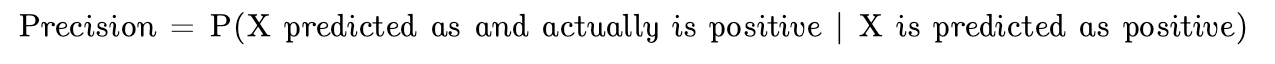

The following results are obtained.

Now, you feed a new picture to your machine learning model. Given that your classification model predicts that picture X is a photo of a chihuahua, how likely is it that the photo actually shows a chihuahua? Mathematically, this translates to the following:

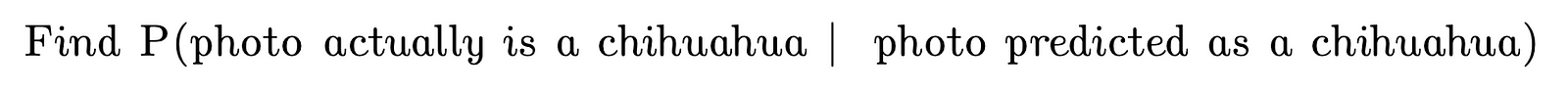

The answer is 3/4. How do we know that? Let’s find out, step-by-step.

- Since we are given that the model predicted ‘chihuahua’, we focus only on the yellow highlighted column and ignore the other column. In other words, we transport ourselves to a ‘new universe’ where the model can only predict that the picture is a chihuahua.

- In this new universe, the model made 600 predictions in total.

- Out of the 600 predictions made, 450 of those are correct, while 150 are incorrect.

- Thus, the conditional probability is 450/600, which simplifies to 3/4.
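The steps above can be sketched in Python using only the counts from the ‘predicted chihuahua’ column quoted in the text (450 correct and 150 incorrect out of 600). The variable names are my own.

```python
from fractions import Fraction

# Counts from the 'predicted chihuahua' column described above.
correct_chihuahua_predictions = 450    # the picture really was a chihuahua
incorrect_chihuahua_predictions = 150  # the picture was actually a muffin

# The 'new universe': every case where the model predicted chihuahua.
total_chihuahua_predictions = (
    correct_chihuahua_predictions + incorrect_chihuahua_predictions
)

# P(actual chihuahua | predicted chihuahua)
p_actual_given_predicted = Fraction(
    correct_chihuahua_predictions, total_chihuahua_predictions
)

print(total_chihuahua_predictions)  # 600
print(p_actual_given_predicted)     # 3/4
```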

What you have seen is a confusion matrix, commonly used in machine learning. Intuitively, a confusion matrix is a table that tells us how well your model has performed after it has been trained.

More concretely, a confusion matrix is a table with two rows and two columns that reports the number of false positives, false negatives, true positives, and true negatives, as seen above.

Understanding a confusion matrix and its associated metrics requires an understanding of conditional probability.

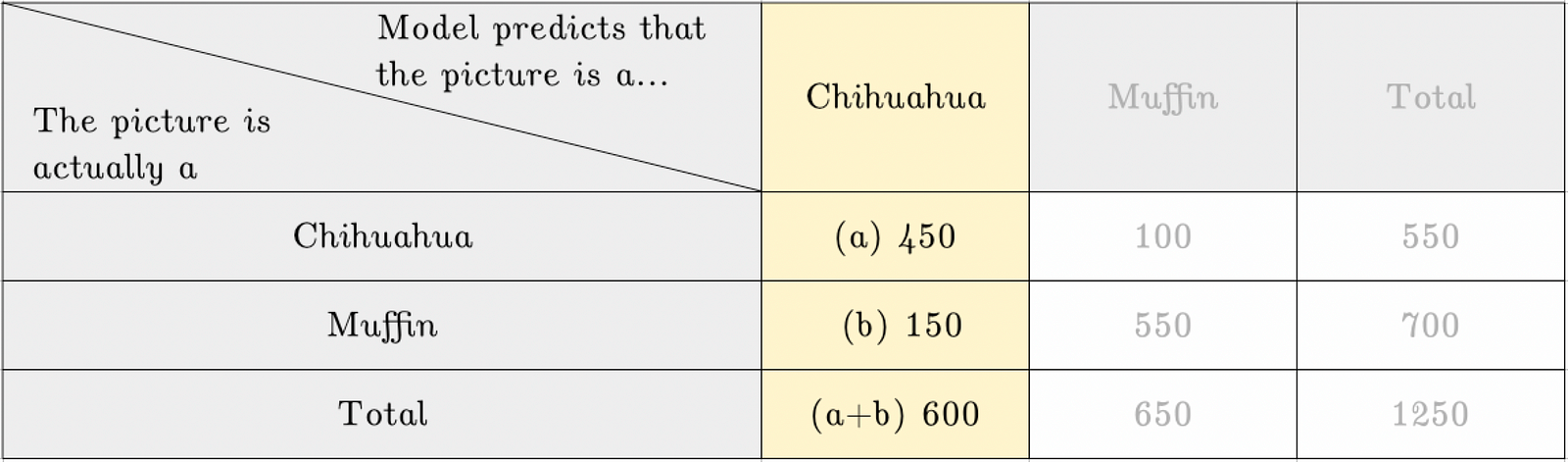

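What we have just calculated is a metric called precision. Precision and some related metrics can each be written as a conditional probability, where TP, FP, TN and FN stand for true positives, false positives, true negatives and false negatives:

- Precision = TP / (TP + FP) = P(actually positive | predicted positive)

- Recall = TP / (TP + FN) = P(predicted positive | actually positive)

- Specificity = TN / (TN + FP) = P(predicted negative | actually negative)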

Can you calculate recall and specificity using the table above?
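If you want to check your answers, the three metrics can be sketched as small Python functions. The function names are hypothetical, and I am assuming that ‘chihuahua’ is treated as the positive class. Only the precision call uses numbers quoted in this post; for recall and specificity, plug in the false negative and true negative counts from the table.

```python
from fractions import Fraction


def precision(tp, fp):
    """P(actually positive | predicted positive) = TP / (TP + FP)."""
    return Fraction(tp, tp + fp)


def recall(tp, fn):
    """P(predicted positive | actually positive) = TP / (TP + FN)."""
    return Fraction(tp, tp + fn)


def specificity(tn, fp):
    """P(predicted negative | actually negative) = TN / (TN + FP)."""
    return Fraction(tn, tn + fp)


# Counts quoted in the text, with chihuahua as the positive class:
# 450 true positives and 150 false positives.
chihuahua_precision = precision(tp=450, fp=150)
print(chihuahua_precision)  # 3/4
```

Each function restricts attention to a different ‘universe’: precision conditions on what the model predicted, while recall and specificity condition on what the picture actually shows.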

Conclusion

Conditional probability is a way for us to logically quantify uncertainty under different conditions. It is an important tool in the toolbox of data scientists. Be sure to master it if you’re serious about being a good data scientist. Here are a few resources that I recommend.

For the new learner who also wants to pick up programming and coding, HarvardX’s Data Science: Probability (PH125.3x) on Datacamp* will provide a gentle introduction to probability while allowing you to implement probability in R. Moreover, it comes with a motivating case study on the financial crisis of 2007–08, potentially a project that you can develop further and showcase in your portfolio.

For the advanced learner, I suggest taking the HarvardX Stat 110: Introduction to Probability*. This class is by far one of the most rewarding I have attended. Professor Blitzstein, one of my favourite professors for probability, covers the topics rigorously and intuitively.

Also, feel free to connect with me on LinkedIn if you have any questions, or simply want to learn data science together.

This is part of a series on probability. It’s bite-size data science that you can learn now in 5 minutes.

- Probability Models and Axioms

- Probability vs Statistics

- Conditional Probability (you’re here!)

- Bayesian Statistics (coming soon)

- Discrete Probability Distribution (coming soon)

- Continuous Probability Distribution (coming soon)

- Averages and the Law of Large Numbers (coming soon)

- Central Limit Theorem (coming soon)

- Joint Distributions (coming soon)

- Markov Chains (coming soon)

(Edit 2022: I discontinued this series so this is the final post in this series)

References and Footnotes

[1] Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K., & Fei-Fei, L. (2009). Imagenet: A large-scale hierarchical image database. In 2009 IEEE conference on computer vision and pattern recognition (pp. 248–255).

*These are affiliate links to courses that I recommend. This means that if you make a purchase after clicking the links, I will receive a percentage of the fees, at no additional cost to you.